Do you want to enable Azure AD (AAD) integration on your Azure Kubernetes Service (AKS) cluster but keep hitting 403 Forbidden errors in the Azure portal when you try to view Kubernetes objects? If so, this post is for you. We’ll look at what causes the errors and three ways to fix them.

Problem

Recently I’ve been on a couple of calls where AKS users were baffled by how to browse AKS objects via the Azure Portal once AAD integration was enabled. In this post we’ll walk through setting up a VERY basic cluster and enabling AAD integration.

Once AAD integration is enabled on a cluster it cannot be undone. You should test it just like any other feature to ensure it suits your needs before moving to production.

First things first, let’s get a basic Kubernetes cluster created with AAD integration enabled. In this example we aren’t going to explore any AKS settings besides the AAD integration bits, so if you are looking for specific configurations for AKS you’ll want to check out the AKS Best Practices.

>> az group create -n MyExampleRG -l eastus

>> az aks create -g MyExampleRG -n MyAKSCluster --enable-aad

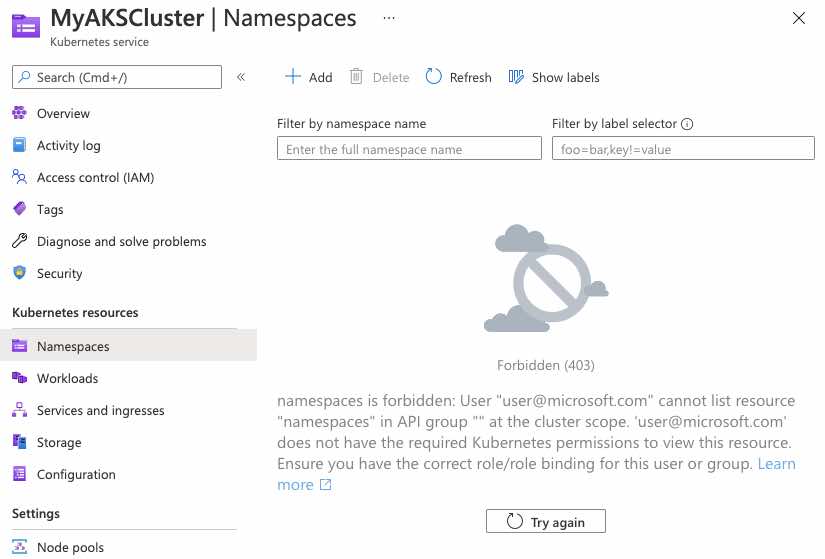

We now have an AKS cluster named MyAKSCluster that has been integrated with Azure AD. Right away, if you try to view this cluster in the Azure portal, you’ll see some limitations on what you can see inside the cluster even though you created it!

Additionally, if we pull the credentials for this cluster with az and use them with kubectl, we’ll see that we can’t view anything via kubectl either.

>> az aks get-credentials -n MyAKSCluster -g MyExampleRg

Merged "MyAKSCluster" as current context in /Users/bmcconnell/.kube/config

>> kubectl get nodes

To sign in, use a web browser to open the page https://microsoft.com/devicelogin and enter the code FGDNP2KLS to authenticate.

Error from server (Forbidden): nodes is forbidden: User "user@microsoft.com" cannot list resource "nodes" in API group "" at the cluster scope

On the positive side you can already see that Azure AD is getting invoked since we are being directed to https://microsoft.com/devicelogin to get access to AKS.
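If you’d rather not read the error text, `kubectl auth can-i` asks the API server the same question directly. Here’s a minimal sketch, assuming the kubeconfig context pulled above is the active one. The helper name `can_list_nodes` is made up for this post.

```shell
# Hypothetical helper (not part of az or kubectl): report whether the
# currently signed-in identity may list nodes at cluster scope.
can_list_nodes() {
  local answer
  # `kubectl auth can-i` prints "yes" or "no" on stdout. The exit status is
  # ignored here and the decision is made from the printed answer.
  # stderr is left alone so the device login prompt stays visible.
  answer=$(kubectl auth can-i list nodes || true)
  if [ "$answer" = "yes" ]; then
    echo "allowed"
  else
    echo "forbidden"
  fi
}

# Usage against a live cluster:
#   can_list_nodes
```

On the freshly created cluster above this should report forbidden, which matches the error kubectl returned.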

Solution

Now that we’ve got a well-defined problem, let’s fix it.

Solution #1 - Create Cluster with --aad-admin-group-object-ids

The easiest fix is to avoid the situation entirely by specifying an AAD admin group when we create the cluster. As long as we are a member of that group, we have access to Kubernetes objects in the portal.

>> az ad group list --filter "displayname eq 'myAKSAdminGroup'" -o table

DisplayName      MailEnabled    MailNickname     ObjectId
---------------  -------------  ---------------  ------------------------------------
myAKSAdminGroup  False          myAKSAdminGroup  9943dc8b-3911-4eab-af34-8b61ac667714

>> az aks create -g MyExampleRG -n MyAKSCluster --enable-aad \
     --aad-admin-group-object-ids 9943dc8b-3911-4eab-af34-8b61ac667714

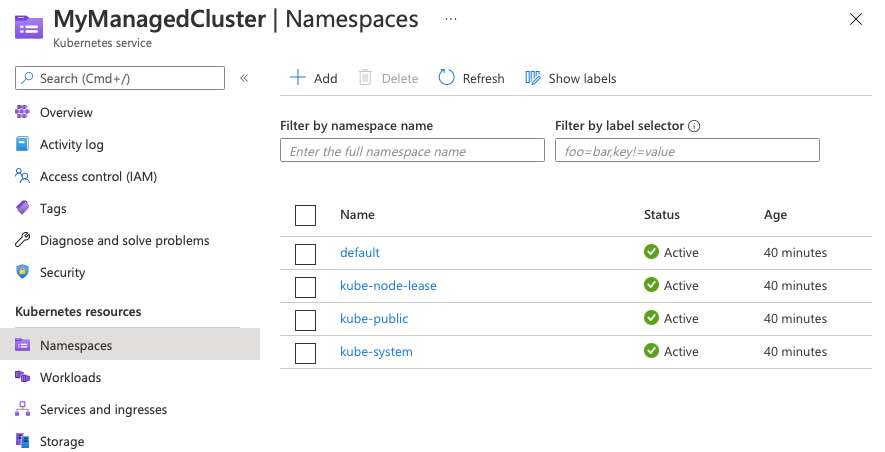

Now if we check out our cluster in the portal we’ll see the objects in Kubernetes.
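The two steps above (look up the group, then create the cluster) can be chained so you never copy the object ID by hand. This is only a sketch: the function names are made up, and it assumes the group’s ID is exposed as `objectId`, as in the table output shown in this post. Newer Graph-based versions of the az CLI name that field `id`, so adjust the query if yours does.

```shell
# Hypothetical helper: resolve an Azure AD group's object ID from its name.
# Assumption: the field is `objectId` (matches the CLI output in this post);
# on newer az versions use `--query id` instead.
group_object_id() {
  az ad group show --group "$1" --query objectId -o tsv
}

# Hypothetical helper: create an AAD-enabled cluster with that group as admin.
create_aad_cluster() {
  local rg="$1" cluster="$2" group="$3" gid
  gid=$(group_object_id "$group") || return 1
  # Stop early if the lookup returned nothing.
  [ -n "$gid" ] || { echo "group not found: $group" >&2; return 1; }
  az aks create -g "$rg" -n "$cluster" --enable-aad \
    --aad-admin-group-object-ids "$gid"
}

# Usage (needs an authenticated az session):
#   create_aad_cluster MyExampleRG MyAKSCluster myAKSAdminGroup
```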

Solution #2 - Update the Cluster with --aad-admin-group-object-ids

So now we’ll assume that you’ve already created your cluster and either don’t want to start again or can’t. You can add an admin group to an existing AAD-enabled cluster with az aks update:

>> az aks update -n myManagedCluster2 -g MyExampleRg \
     --aad-admin-group-object-ids 9943dc8b-3911-4eab-af34-8b61ac667714

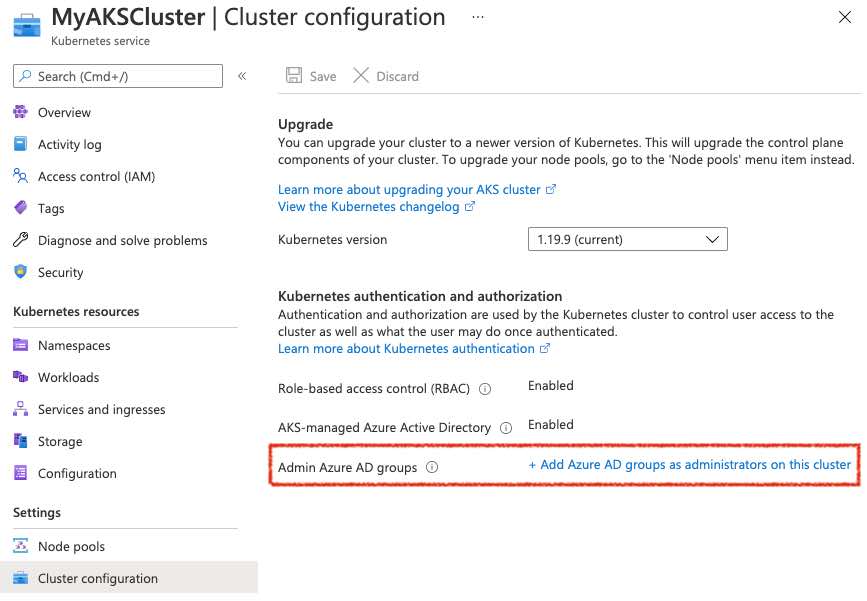

This can also be updated in the Azure portal: browse to the AKS cluster, select Cluster configuration from the left navigation menu, and add the correct Azure AD group under Admin Azure AD groups.
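Whichever route you take, it’s worth confirming that the group actually landed on the cluster. The sketch below reads it back with `az aks show`. The helper names are made up, and the query path `aadProfile.adminGroupObjectIDs` is my assumption about where the CLI reports the IDs, so check the JSON from `az aks show` if it comes back empty.

```shell
# Hypothetical helper: print the admin group object IDs set on a cluster,
# one per line. Assumption: they live under aadProfile.adminGroupObjectIDs.
list_admin_groups() {
  az aks show -g "$1" -n "$2" \
    --query "aadProfile.adminGroupObjectIDs" -o tsv | tr '\t' '\n'
}

# Hypothetical helper: succeed only if the given group ID is configured.
has_admin_group() {
  list_admin_groups "$1" "$2" | grep -qxF "$3"
}

# Usage:
#   has_admin_group MyExampleRG MyAKSCluster 9943dc8b-3911-4eab-af34-8b61ac667714 \
#     && echo "admin group is set"
```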

Solution #3 - Add ClusterRoleBinding to AKS Cluster

If we scratch a little deeper into what AKS does when we enable AAD integration, we’ll find that using --aad-admin-group-object-ids creates a ClusterRoleBinding that ties the group to a ClusterRole. For instance, if we use kubectl to investigate, we’ll find a clusterrolebinding called aks-cluster-admin-binding-aad:

>> kubectl describe clusterrolebinding aks-cluster-admin-binding-aad

Name:         aks-cluster-admin-binding-aad
Labels:       addonmanager.kubernetes.io/mode=Reconcile
              kubernetes.io/cluster-service=true
Annotations:  <none>
Role:
  Kind:  ClusterRole
  Name:  cluster-admin
Subjects:
  Kind   Name                                  Namespace
  ----   ----                                  ---------
  Group  9943dc8b-3911-4eab-af34-8b61ac667714

One thing you may have noticed is that there is no equivalent flag on az aks create to enable access to user objects… only group objects. So if you are in a situation where you are creating a personal cluster and don’t want to enable group access, you can still use AAD integration but you’ll need to add your own clusterrolebinding.

If you have not set up RBAC, kubectl will not work with the credentials from az aks get-credentials on an AAD-integrated cluster unless you pass the --admin flag to get-credentials. As long as you have Contributor access to the AKS cluster, you can use the --admin flag to get credentials without going through AAD (as of May 2021; this may change in the future).

If we don’t want to give an entire group access via AAD we can add a clusterrolebinding to our cluster that will provide access to a particular user.

>> cat aks-cluster-admin-binding-aad-user.yaml

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: aks-cluster-admin-binding-aad-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: User
  name: user@microsoft.com

>> kubectl apply -f aks-cluster-admin-binding-aad-user.yaml

clusterrolebinding.rbac.authorization.k8s.io/aks-cluster-admin-binding-aad-user created
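The kubectl apply above only works with credentials that already have access to the cluster, which on a fresh AAD cluster means the --admin credentials mentioned earlier. One way to keep those out of your everyday kubeconfig is to write them to a throwaway file, apply the manifest, and delete the file. This is a sketch of that idea, not a recommended procedure. The function name `apply_as_admin` is made up.

```shell
# Hypothetical helper: apply a manifest using short-lived admin credentials.
apply_as_admin() {
  local rg="$1" cluster="$2" manifest="$3" tmpdir rc
  tmpdir=$(mktemp -d) || return 1
  # --admin skips the Azure AD sign-in; --file writes the credentials to a
  # temporary kubeconfig instead of merging them into ~/.kube/config.
  if az aks get-credentials -g "$rg" -n "$cluster" --admin \
       --file "$tmpdir/config" \
     && kubectl --kubeconfig "$tmpdir/config" apply -f "$manifest"; then
    rc=0
  else
    rc=1
  fi
  # Always remove the admin kubeconfig, whether or not the apply succeeded.
  rm -rf "$tmpdir"
  return "$rc"
}

# Usage:
#   apply_as_admin MyExampleRG MyAKSCluster aks-cluster-admin-binding-aad-user.yaml
```

After this runs, your regular kubeconfig still holds only the AAD-backed credentials, so day-to-day access keeps going through Azure AD.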

The clusterrolebinding above references an existing clusterrole in AKS called cluster-admin. This role provides access to all resources cluster-wide.

>> kubectl describe clusterrole cluster-admin

Name:         cluster-admin
Labels:       kubernetes.io/bootstrapping=rbac-defaults
Annotations:  rbac.authorization.kubernetes.io/autoupdate: true
PolicyRule:
  Resources  Non-Resource URLs  Resource Names  Verbs
  ---------  -----------------  --------------  -----
  *.*        []                 []              [*]
             [*]                []              [*]

Now at this point the user defined in the aks-cluster-admin-binding-aad-user.yaml file above will have access to view the Kubernetes resources in the Azure portal.
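If you want to confirm the binding from the command line before opening the portal, kubectl can impersonate the user and ask the API server what they are allowed to do. This is a rough sketch, assuming you are running it with credentials that are allowed to impersonate (cluster-admin is). The helper name `can_user` is made up. Impersonating with `--as` alone does not carry the user’s group memberships, so this checks user bindings like the one above, not group bindings.

```shell
# Hypothetical helper: ask the API server whether USER may VERB RESOURCE.
can_user() {
  local user="$1" verb="$2" resource="$3" answer
  # --as impersonates the given user for this one request.
  answer=$(kubectl auth can-i "$verb" "$resource" --as "$user" || true)
  [ "$answer" = "yes" ]
}

# Usage:
#   can_user user@microsoft.com list nodes && echo "binding works"
```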